From objects to data

Reading a book gives us information (and fun!), but it is a slow method. If we want to read many books, we need a lot of time at our disposal.

So how can we read many books in a short time and still get some information out of them?

Enter: distant reading and co.

As a first step, distant reading requires the library we are interested in to be machine-readable. Once we have an image of every page of a book, we can use OCR to embed the recognized text in the image. In a second step, we can try automated processes to extract the structure of the text on each page and save the result in the TEI format.
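A minimal sketch of that last step, assuming the OCR output is already available as one plain-text string per page and that blank lines separate paragraphs. The function name `pages_to_tei` and the paragraph heuristic are illustrative assumptions; real structure extraction is considerably harder. The sketch writes only the mandatory TEI header elements and marks the start of each page with `<pb/>`.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"
ET.register_namespace("", TEI_NS)  # serialize TEI as the default namespace


def tei(tag: str) -> str:
    """Return a tag name qualified with the TEI namespace."""
    return f"{{{TEI_NS}}}{tag}"


def pages_to_tei(title: str, pages: list[str]) -> str:
    """Wrap OCR text (one string per page) in a minimal TEI document.

    Hypothetical heuristic: blank lines separate paragraphs.
    """
    root = ET.Element(tei("TEI"))

    # Minimal header: fileDesc needs titleStmt, publicationStmt and sourceDesc.
    file_desc = ET.SubElement(ET.SubElement(root, tei("teiHeader")), tei("fileDesc"))
    ET.SubElement(ET.SubElement(file_desc, tei("titleStmt")), tei("title")).text = title
    ET.SubElement(ET.SubElement(file_desc, tei("publicationStmt")), tei("p")).text = "Unpublished OCR transcription"
    ET.SubElement(ET.SubElement(file_desc, tei("sourceDesc")), tei("p")).text = "Generated from page images via OCR"

    body = ET.SubElement(ET.SubElement(root, tei("text")), tei("body"))
    div = ET.SubElement(body, tei("div"))
    for number, page in enumerate(pages, start=1):
        # <pb/> marks where a new page of the source begins.
        ET.SubElement(div, tei("pb"), n=str(number))
        for block in page.split("\n\n"):
            text = " ".join(block.split())  # collapse line breaks inside a paragraph
            if text:
                ET.SubElement(div, tei("p")).text = text

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(pages_to_tei("Example", ["First line\nof a paragraph.\n\nSecond paragraph.", "Page two."]))
```

Keeping page breaks as `<pb/>` milestones rather than wrapping each page in its own element lets paragraphs continue across pages, which matches how TEI usually treats the physical page.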

In the following, we discuss some aspects of availability and bias in these steps and begin with some introductory exercises.

OCR pitfalls and opportunities

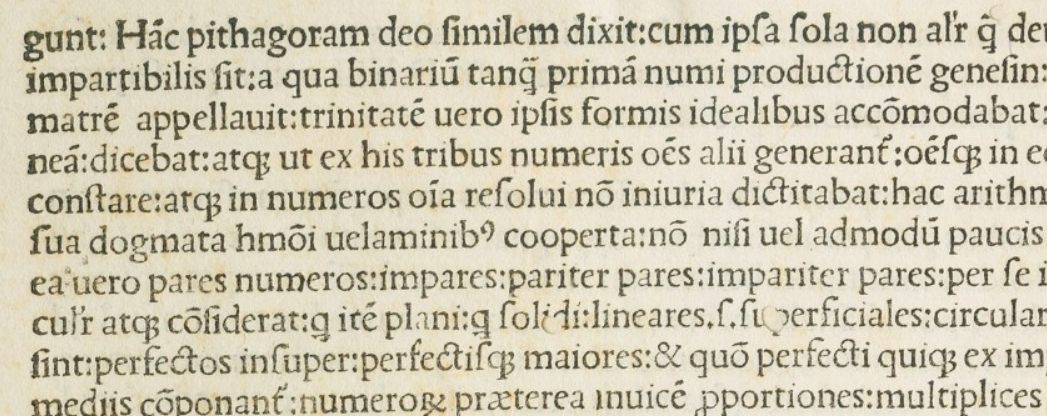

OCR = Optical character recognition

Make sources machine-readable

The most common programs (ABBYY, Tesseract) work well only for “modern” (i.e. printed!) texts

Many other approaches exist, see e.g. OCR4all

Tesseract is open source, and useful tutorials are easy to find, see e.g. here.

For texts spanning a long period of time, OCR quality will vary considerably
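Tesseract can also be called from a script, which is what makes OCR of whole collections feasible. A minimal sketch, assuming the `tesseract` command-line tool is installed together with the language models you need. `frk` (German Fraktur) is among the models distributed in Tesseract's tessdata repositories, but which models are available depends on your installation. The helper names are hypothetical. Passing `stdout` as the output base makes Tesseract print the recognized text instead of writing a file.

```python
import shutil
import subprocess
from collections.abc import Sequence


def tesseract_command(image_path: str, languages: Sequence[str]) -> list[str]:
    """Build a Tesseract CLI call that prints the recognized text to stdout."""
    # Several language models are combined with '+', e.g. 'deu+frk'.
    return ["tesseract", image_path, "stdout", "-l", "+".join(languages)]


def ocr_page(image_path: str, languages: Sequence[str] = ("deu",)) -> str:
    """Run Tesseract on one page image and return the recognized plain text."""
    if shutil.which("tesseract") is None:
        raise RuntimeError("tesseract executable not found on PATH")
    result = subprocess.run(
        tesseract_command(image_path, languages),
        capture_output=True,  # Tesseract writes progress messages to stderr
        text=True,
        check=True,  # raise CalledProcessError if Tesseract fails
    )
    return result.stdout
```

For a Fraktur print, one could try `ocr_page("page_001.png", ["deu", "frk"])` (hypothetical file name); running different models on the same page is a quick way to see how strongly OCR quality depends on the model.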

Example 1

As a first example of data quality, have a look at Leo Bergmann's Das Buch der Arbeit from 1855. Although it is printed in Fraktur, the text is recognized and readable. However, the OCR contains several errors, and the text structure is not recognized very well.
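One common way to quantify such errors is the character error rate (CER): the edit (Levenshtein) distance between the OCR output and a manually corrected ground truth, divided by the length of the ground truth. A minimal sketch; the sample strings are made up for illustration and are not taken from the Bergmann scan.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def character_error_rate(ocr_text: str, ground_truth: str) -> float:
    """CER = edit distance / number of characters in the ground truth."""
    if not ground_truth:
        raise ValueError("ground truth must not be empty")
    return levenshtein(ocr_text, ground_truth) / len(ground_truth)


# Hypothetical OCR error: 'h' misread as 'b' (one substitution over 19 characters).
print(character_error_rate("Das Bucb der Arbeit", "Das Buch der Arbeit"))
```

Because the CER needs a ground truth, it is usually estimated on a small, hand-corrected sample of pages and then taken as indicative for the rest of the collection.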

Scanning quality

OCR quality improves with higher-resolution images

But what about file size? ➡️ At 400 dpi, one page is about 40 MB

To research the paper or the materiality of sources, even higher resolutions might be necessary

Long-term preservation of the raw data raises questions about data quality
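The file size figure can be checked with a back-of-the-envelope calculation, assuming an uncompressed A4 page (210 × 297 mm) scanned in 24-bit RGB colour. Compression, greyscale scans or other page formats change the result considerably; the function name is illustrative.

```python
MM_PER_INCH = 25.4


def uncompressed_scan_bytes(width_mm: float, height_mm: float, dpi: int, bytes_per_pixel: int = 3) -> int:
    """Size of an uncompressed raster scan; 3 bytes per pixel = 24-bit RGB."""
    width_px = round(width_mm / MM_PER_INCH * dpi)
    height_px = round(height_mm / MM_PER_INCH * dpi)
    return width_px * height_px * bytes_per_pixel


for dpi in (300, 400, 600):
    size_mb = uncompressed_scan_bytes(210, 297, dpi) / 1e6
    print(f"A4 at {dpi} dpi: {size_mb:.1f} MB")
```

At 400 dpi this gives roughly 46 MB per page, the same order of magnitude as stated above. Since the pixel count grows with the square of the resolution, doubling the dpi quadruples the file size.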

Existing collections

Many text collections already exist. Depending on the research question, it can be much faster to use one of them as a starting point. To capture the full structure of a text, many collections use the XML-based standard of the Text Encoding Initiative (TEI). See e.g. TEI-C for an introduction.
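Because TEI is XML, such files can be read with standard XML tools. A minimal sketch using Python's built-in ElementTree: the namespace URI is the one defined by the TEI guidelines, while the sample document is a made-up fragment. Files from real collections usually carry much richer headers and markup.

```python
import xml.etree.ElementTree as ET

NS = {"tei": "http://www.tei-c.org/ns/1.0"}


def read_tei(xml_text: str) -> tuple[str, list[str]]:
    """Return the title and the plain text of all body paragraphs."""
    root = ET.fromstring(xml_text)
    title = root.findtext("tei:teiHeader/tei:fileDesc/tei:titleStmt/tei:title", default="", namespaces=NS)
    # '//' finds paragraphs at any depth inside <body>, e.g. within nested <div>s;
    # itertext() also collects text inside inline markup such as <hi>.
    paragraphs = ["".join(p.itertext()).strip() for p in root.iterfind("tei:text/tei:body//tei:p", NS)]
    return title, paragraphs


SAMPLE = """<TEI xmlns="http://www.tei-c.org/ns/1.0">
  <teiHeader><fileDesc>
    <titleStmt><title>Sample text</title></titleStmt>
    <publicationStmt><p>Unpublished</p></publicationStmt>
    <sourceDesc><p>Made up for illustration</p></sourceDesc>
  </fileDesc></teiHeader>
  <text><body><div>
    <p>First paragraph with <hi rend="italic">markup</hi>.</p>
    <p>Second paragraph.</p>
  </div></body></text>
</TEI>"""

print(read_tei(SAMPLE))
```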

LOC (Library of Congress): Crowdsourced transcriptions

TextGrid: German texts in TEI format

Newton Project: Works and correspondence of Isaac Newton

Verfassungsschutzberichte: All public reports from the Office for the Protection of the Constitution

arXiv: Preprints in physics and related fields from the last decades
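Many of these collections also offer programmatic access. As one example, arXiv provides a public query API that returns results as an Atom feed. The sketch below assumes the `export.arxiv.org/api/query` endpoint with its `search_query`, `start` and `max_results` parameters; the category `physics.hist-ph` (History and Philosophy of Physics) is only an example query, and the helper names are hypothetical. Fetching requires network access, and arXiv asks users to space out repeated requests.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = {"atom": "http://www.w3.org/2005/Atom"}


def arxiv_query_url(search_query: str, start: int = 0, max_results: int = 10) -> str:
    """Build a query URL for the arXiv API."""
    params = {"search_query": search_query, "start": start, "max_results": max_results}
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def entry_titles(atom_xml: str) -> list[str]:
    """Extract the titles of all <entry> elements from an Atom feed."""
    root = ET.fromstring(atom_xml)
    # Titles may contain line breaks; collapse all whitespace.
    return [" ".join(entry.findtext("atom:title", default="", namespaces=ATOM).split())
            for entry in root.iterfind("atom:entry", ATOM)]


def fetch_titles(search_query: str, max_results: int = 10) -> list[str]:
    """Query arXiv over the network and return the titles of the results."""
    with urllib.request.urlopen(arxiv_query_url(search_query, max_results=max_results)) as response:
        return entry_titles(response.read().decode("utf-8"))


print(arxiv_query_url("cat:physics.hist-ph", max_results=5))
```

Separating URL building, fetching and parsing keeps the parsing step testable offline on a saved feed.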

Exercises

Exercise 1

Take a PDF you are interested in and convert it to TEI using GROBID

URL: GROBID

What result do you get?

Can it be converted? If not, why not?

Is the author/publisher information correct? (Only applicable if you use an academic paper.)
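Besides the web interface, a running GROBID server can be called from a script through its REST API. A minimal sketch, assuming a local GROBID service on its default port 8070 and the `/api/processFulltextDocument` endpoint, which takes the PDF as a multipart form field named `input` and returns TEI XML. The multipart body is assembled by hand so that only the standard library is needed; the helper names are hypothetical.

```python
import os
import urllib.request
import uuid

GROBID_URL = "http://localhost:8070/api/processFulltextDocument"


def multipart_body(field: str, filename: str, data: bytes) -> tuple[bytes, str]:
    """Encode one PDF file as multipart/form-data; returns (body, content type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/pdf\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def pdf_to_tei(pdf_path: str, url: str = GROBID_URL) -> str:
    """Send a PDF to GROBID and return the TEI XML it produces."""
    with open(pdf_path, "rb") as f:
        body, content_type = multipart_body("input", os.path.basename(pdf_path), f.read())
    request = urllib.request.Request(url, data=body, headers={"Content-Type": content_type}, method="POST")
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

For example, `pdf_to_tei("paper.pdf")` (hypothetical file name) returns the TEI document as a string. To check only the author and publisher information, GROBID also offers a `/api/processHeaderDocument` endpoint that processes just the header of the document.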